Git as installed on macOS ships with a system-wide config that sets the credential helper to `osxkeychain`, which prompts the user with a dialog.

```
$ git config list --system
credential.helper=osxkeychain
```

By setting the environment variable [`GIT_CONFIG_NOSYSTEM=true`](https://git-scm.com/docs/git-config#ENVIRONMENT), Git will not load the system-wide config, which prevents the dialog from appearing.
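The same setting can be applied whenever a program shells out to Git. Below is a minimal Go sketch, assuming commands are built with `os/exec`; `gitCommand` is a hypothetical helper, not Gitea's actual git module.

```go
package main

import (
	"os"
	"os/exec"
)

// gitCommand builds a git invocation that skips the system-wide config
// (e.g. a credential.helper=osxkeychain entry) by setting
// GIT_CONFIG_NOSYSTEM in the child process environment only.
func gitCommand(args ...string) *exec.Cmd {
	cmd := exec.Command("git", args...)
	// Inherit the current environment, then disable the system config.
	cmd.Env = append(os.Environ(), "GIT_CONFIG_NOSYSTEM=true")
	return cmd
}
```

Setting the variable on the child process rather than globally keeps the parent's own environment untouched.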

Closes #26717

The R package repository currently does not support older versions of packages, which should be served from a separate `/Archive` route. This PR remedies that by adding a new path route.

I am a member of a group that loves using Gitea, and this bug has been annoying us for a long time. I hope it can be merged in time for Gitea 1.23.0.

Any feedback is much appreciated.

Fixes #32782

1. use grid instead of table, completely drop "ui table" from that list

2. move some "commit sign" related styles into a new file by the way (no

change) because I need to figure out where `#repo-files-table` is used.

3. move legacy "branch/tag selector" related code into repo-legacy.ts,

now there are 13 `import $` files left.

Rearrange the clone panel to use less horizontal space.

The following changes have been made to achieve this:

- Moved everything into the dropdown menu

- Moved the HTTPS/SSH Switch to a separate line

- Moved the "Clone in VS Code" button up and added a divider

- Named the dropdown button "Code", added appropriate icon

---------

Co-authored-by: techknowlogick <techknowlogick@gitea.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Resolve#31492

The response time for the Pull Requests API has improved significantly,

dropping from over `2000ms` to about `350ms` on my local machine. It's

about `6` times faster.

A key area for further optimization lies in batch-fetching data for `apiPullRequest.ChangedFiles`, `apiPullRequest.Additions`, and `apiPullRequest.Deletions`.

The `TestAPIViewPulls` test already exists, and new tests have been added.

- This PR also fixes some bugs in the `GetDiff` functions.
- This PR also fixes inconsistent test data: for a pull request, the head branch's reference should equal the reference in `pull/xxx/head`.

Redesign the time tracker sidebar, and add "time estimate" support (in "1d 2m" format).

Closes #23112

---------

Co-authored-by: stuzer05 <stuzer05@gmail.com>

Co-authored-by: Yarden Shoham <hrsi88@gmail.com>

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Enterprise/organization users often want to allow only OAuth2 login.

This PR adds a new config option to disable the password-based login form. It is a simple and clear approach and won't block future login-system refactoring work.

Fix a TODO in #24821

Replace #21851. Close #7633, close #13606

Move all mail-sender-related code into a subpackage of `services/mailer`. Just a move, no code change.

Then only the new subpackage depends on the go-mail package, so we can replace go-mail with another package, since it is unmaintained. Ref #18664

This fixes a TODO in the code to validate the RedirectURIs when adding

or editing an OAuth application in user settings.

This also includes a refactor of the user settings tests to only create

the DB once per top-level test to avoid reloading fixtures.
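A minimal sketch of what such validation could check, assuming each redirect URI must be absolute and fragment-free (RFC 6749, section 3.1.2, forbids fragments in redirection endpoint URIs). `validateRedirectURIs` is a hypothetical helper, not the function added by this change, and it is deliberately strict: it would also reject host-less custom-scheme URIs that native apps sometimes use.

```go
package main

import (
	"fmt"
	"net/url"
)

// validateRedirectURIs checks that every URI parses, is absolute
// (has both a scheme and a host) and carries no fragment.
func validateRedirectURIs(uris []string) error {
	for _, raw := range uris {
		u, err := url.Parse(raw)
		if err != nil {
			return fmt.Errorf("invalid redirect URI %q: %w", raw, err)
		}
		if u.Scheme == "" || u.Host == "" {
			return fmt.Errorf("redirect URI %q must be absolute", raw)
		}
		if u.Fragment != "" {
			return fmt.Errorf("redirect URI %q must not contain a fragment", raw)
		}
	}
	return nil
}
```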

There are still some functions under `models` after the last big refactor of `models`. This change moves all team-related functions to the service layer with no code change.

## Solves

Currently, to reorder rules you have to alter their creation date, so you basically have to delete and recreate them in the right order. This is more than just inconvenient.

## Solution

Add a new column for prioritization.

## Demo WebUI Video

https://github.com/user-attachments/assets/92182a31-9705-4ac5-b6e3-9bb74108cbd1

---

*Sponsored by Kithara Software GmbH*

This PR mainly removes some global variables, moves some code, and renames some functions to make the code clearer.

This PR also removes the testing-only option `ForceHardLineBreak` during refactoring, since the behavior is clear now.

This PR removes (almost) all path tricks and introduces the "renderhelper" package.

Now we can clearly see the rendering behaviors for comments/files/wikis; more details are in the "renderhelper" tests.

Fix #31411, fix #18592, fix #25632, and maybe more problems. (PS: this also fixes #32608.)

This PR rewrites the `GetReviewer` function and moves it to the service layer.

Reviewers should not be watchers, so this PR removes all watchers from the reviewers. When the repository is under an organization, the pull request unit read permission is now checked, which resolves the bug in #32394.

Fix #32394

Resolve #31609

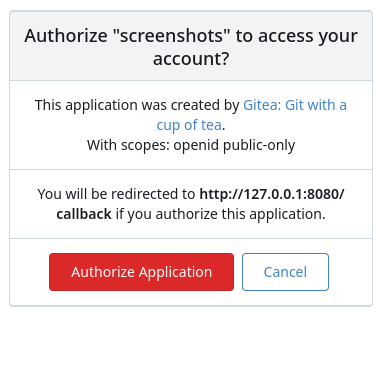

This PR was initiated following my personal research to find the

lightest possible Single Sign-On solution for self-hosted setups. The

existing solutions often seemed too enterprise-oriented, involving many

moving parts and services, demanding significant resources while

promising planetary-scale capabilities. Others were adequate in

supporting basic OAuth2 flows but lacked proper user management

features, such as a change password UI.

Gitea hits the sweet spot for me, provided it supports more granular

access permissions for resources under users who accept the OAuth2

application.

This PR aims to introduce granularity in handling user resources as nonintrusively and simply as possible. It allows third parties to inform users that they do not intend to ask for full access and instead request a specific, reduced scope. If the provided scopes are **only** the typical ones for OIDC/OAuth2 (`openid`, `profile`, `email`, and `groups`), everything remains unchanged (currently full access to the user's resources). Additionally, this PR supports processing scopes already introduced with [personal tokens](https://docs.gitea.com/development/oauth2-provider#scopes) (e.g. `read:user`, `write:issue`, `read:group`, `write:repository`...).

Personal tokens define scopes around specific resources: user info,

repositories, issues, packages, organizations, notifications,

miscellaneous, admin, and activitypub, with access delineated by read

and/or write permissions.

The initial case I wanted to address was to have Gitea act as an OAuth2 Identity Provider. With this PR, I would only add `openid public-only` to obtain an access token that lets the third party authenticate the Gitea user, with no further access to the API or the user's resources.

Another example: if a third party wanted to interact solely with Issues,

it would need to add `read:user` (for authorization) and

`read:issue`/`write:issue` to manage Issues.

My approach is based on my understanding of how scopes can be utilized, supported by examples like [Sample Use Cases: Scopes and Claims](https://auth0.com/docs/get-started/apis/scopes/sample-use-cases-scopes-and-claims) on auth0.com.

I renamed `CheckOAuthAccessToken` to `GetOAuthAccessTokenScopeAndUserID`, so it now returns the `AccessTokenScope` and the user's ID. When additional scopes are requested, `userIDFromToken` reduces the default `all` to whatever was asked for via those scopes. The main difference is the ability to reduce the permissions from `all`, as is currently the case, to what is provided by the additional scopes described above.
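The scope handling described above can be sketched roughly like this, assuming the requested scopes arrive as the usual space-delimited OAuth2 `scope` string. `effectiveScope` is a hypothetical helper that returns a comma-joined scope string; the PR itself works with Gitea's `AccessTokenScope` type.

```go
package main

import "strings"

// oidcScopes are the standard OIDC/OAuth2 scopes that, on their own,
// keep the current behaviour (full access).
var oidcScopes = map[string]bool{"openid": true, "profile": true, "email": true, "groups": true}

// effectiveScope returns "all" when only standard scopes were requested,
// otherwise the comma-joined extra scopes that reduce the token's access.
func effectiveScope(requested string) string {
	var extra []string
	for _, s := range strings.Fields(requested) {
		if !oidcScopes[s] {
			extra = append(extra, s)
		}
	}
	if len(extra) == 0 {
		return "all"
	}
	return strings.Join(extra, ",")
}
```

For example, `openid read:user write:issue` would yield `read:user,write:issue`, while `openid profile email` keeps `all`.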


---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

When running e2e tests on flaky networks, Gravatar can cause timeouts and test failures. Turn it off, and populate avatars when the e2e test suite runs, to make the tests reliable.

- Move models/GetForks to services/FindForks

- Add doer as a parameter of FindForks to check permissions

- Slight performance optimization for get forks API with batch loading

of repository units

- Add tests for forking repository to organizations

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

With some CI fine-tuning (`run tests`), SQLite and MSSQL tests can complete in about 12 to 13 minutes (before: more than 14), and MySQL in 18 minutes (before: about 23, or even more than 30).

Major changes:

1. use tmpfs for MySQL storage
1. run `make test-mysql` instead of `make integration-test-coverage`, because the code coverage is not really used at the moment
1. refactor testlogger to make it more reliable and able to report stack traces of stuck tests
1. do not requeue failed items when a queue is being flushed (failed items would keep failing and prevent the flush from completing)
1. reduce the file sizes for testing
1. use math ChaCha20 random data instead of `crypto/rand` (for testing purposes only)
1. no need to `DeleteRepository` in `TestLinguist`
1. other related refactoring to make the code easier to maintain

In profiling integration tests, I found a couple of places where per-test overhead could be reduced:

* Avoid disk IO by synchronizing the test Git repository data instead of deleting and copying it. This saves ~100ms per test on my machine.
* When flushing queues in `PrintCurrentTest`, invoke `FlushWithContext` in parallel.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

There were so many patches to the Render system that it was really difficult to make further improvements.

This PR clears up the legacy problems and fixes TODOs.

1. Rename `RenderContext.Type` to `RenderContext.MarkupType` to clarify

its usage.

2. Use `ContentMode` to replace `meta["mode"]` and `IsWiki`, to clarify

the rendering behaviors.

3. Use "wiki" mode instead of "mode=gfm + wiki=true"

4. Merge `renderByType` and `renderByFile`

5. Add more comments

----

The problem with "mode=document": in many cases it is not set, so many non-comment places incorrectly use the comment-style hard line breaks.

1. move "internal-lfs" route mock to "common-lfs"

2. fine tune tests

3. fix "realm" strings, according to RFC 2617 (https://datatracker.ietf.org/doc/html/rfc2617):
   * `realm = "realm" "=" realm-value`
   * `realm-value = quoted-string`

4. clarify some names of the middlewares, rename `ignXxx` to `optXxx` to

match `reqXxx`, and rename ambiguous `requireSignIn` to `reqGitSignIn`
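A small sketch of producing a compliant realm, assuming the value is emitted in a `WWW-Authenticate` Basic challenge. Under the RFC 2616 grammar that RFC 2617 reuses, a quoted-string may contain escaped characters (quoted-pair), so backslashes and double quotes are escaped. `quoteRealm` and `basicChallenge` are hypothetical helpers.

```go
package main

import "strings"

// quoteRealm renders a realm-value as a quoted-string: the value is
// wrapped in double quotes, and any backslash or double quote inside it
// is escaped with a backslash (quoted-pair).
func quoteRealm(realm string) string {
	r := strings.NewReplacer(`\`, `\\`, `"`, `\"`)
	return `"` + r.Replace(realm) + `"`
}

// basicChallenge builds a WWW-Authenticate value such as: Basic realm="Gitea"
func basicChallenge(realm string) string {
	return "Basic realm=" + quoteRealm(realm)
}
```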

Gitea instances keep reporting a lot of errors like "LFS SSH transfer connection denied, pure SSH protocol is disabled". While debugging the problem, more problems were found. This PR tries to address most of them:

* avoid unnecessary server side error logs (change `fail()` to not log them)

* figure out the broken tests/user2/lfs.git (added comments)

* avoid `migratePushMirrors` failure when a repository doesn't exist (ignore them)

* avoid "Authorization" (internal&lfs) header conflicts, remove the tricky "swapAuth" and use "X-Gitea-Internal-Auth"

* make internal token comparison constant time (it wasn't a serious problem, because in the real world it's nearly impossible to timing-attack the token, but it's good to fix and backport)

* avoid duplicate routers (introduce AddOwnerRepoGitLFSRoutes)

* avoid "internal (private)" routes using session/web context (they should use private context)

* fix incorrect "path" usages (use "filepath")

* fix incorrect mocked route point handling (need to check for a nil func correctly)

* split some tests from "git general tests" to "git misc tests" (to keep "git_general_test.go" simple)

There is still no correct result for the Git LFS SSH tests, so the code is kept (`tests/integration/git_lfs_ssh_test.go`) and a FIXME explains the details.
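Constant-time comparison is available in Go's standard library via `crypto/subtle`. A minimal sketch, where `tokenEqual` is a hypothetical name; note that `ConstantTimeCompare` returns immediately when the lengths differ, so only the token length can leak through timing.

```go
package main

import "crypto/subtle"

// tokenEqual compares a presented internal token with the expected one
// without short-circuiting on the first mismatching byte, so response
// timing does not reveal how much of the token was correct.
func tokenEqual(presented, expected string) bool {
	return subtle.ConstantTimeCompare([]byte(presented), []byte(expected)) == 1
}
```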

From testing, I found that issue posters and users with repository write access can use the edit attachment APIs to rename attachments in a way that circumvents the instance-level file extension restrictions. This change adds checks to these endpoints.
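A minimal sketch of an extension check that could be applied to the new attachment name, assuming the allowed list is a comma-separated string of extensions such as `.png,.zip` and that an empty list means no restriction. `extensionAllowed` is hypothetical and ignores any MIME-type entries the real setting may accept.

```go
package main

import (
	"path/filepath"
	"strings"
)

// extensionAllowed reports whether name's extension appears in the
// comma-separated allowed list (case-insensitive). Blank entries are
// skipped, so a trailing comma cannot whitelist extension-less names.
func extensionAllowed(name, allowed string) bool {
	if strings.TrimSpace(allowed) == "" {
		return true // no restriction configured
	}
	ext := strings.ToLower(filepath.Ext(name))
	for _, a := range strings.Split(allowed, ",") {
		a = strings.ToLower(strings.TrimSpace(a))
		if a == "" {
			continue
		}
		if a == ext {
			return true
		}
	}
	return false
}
```

The important point for this fix is that the check runs on the name supplied in the edit request, not only at upload time.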

Use zero instead of 9999-12-31 for deadline

Fix #32291

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

1. clarify that "filepath" can (and should) contain "{ref}"

2. remove the unclear RepoRefLegacy and RepoRefAny; use RepoRefUnknown to guess

3. by the way, avoid using AppURL

Closes https://github.com/go-gitea/gitea/issues/30296

- Adds a DB fixture for actions artifacts

- Adds artifacts test files

- Clears artifacts test files between each run

- Note: I initially initialized the artifacts only for artifacts tests,

but because the files are small it only takes ~8ms, so I changed it to

always run in test setup for simplicity

- Fix some otherwise flaky tests by making them not depend on previous

tests

This introduces a new flag `BlockAdminMergeOverride` on the branch

protection rules that prevents admins/repo owners from bypassing branch

protection rules and merging without approvals or failing status checks.

Fixes #17131

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

This is a large and complex PR, so let me explain in detail its changes.

First, I had to create new index mappings for Bleve and Elasticsearch, as the current ones do not support searching by filename. This requires Gitea to recreate the code search indexes (I do not know if this is a breaking change, but I feel it deserves a heads-up).

I've used [this approach](https://www.elastic.co/guide/en/elasticsearch/reference/7.17/analysis-pathhierarchy-tokenizer.html) to model the filename index. It allows us to efficiently search for both the full path and the name of a file. Bleve, however, does not support this out of the box, so I had to code a brand new [token filter](https://blevesearch.com/docs/Token-Filters/) to generate the search terms.
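Elasticsearch's `path_hierarchy` tokenizer can also emit path suffixes when its `reverse` option is enabled. The Go sketch below generates comparable terms so that an exact-term query matches either the full path or the bare file name. `pathSuffixes` is a hypothetical illustration, not the Bleve token filter written for this PR, and it assumes `/` as the delimiter.

```go
package main

import "strings"

// pathSuffixes emits every "/"-delimited suffix of a file path, longest
// first: "a/b/c.go" -> "a/b/c.go", "b/c.go", "c.go".
func pathSuffixes(p string) []string {
	parts := strings.Split(strings.Trim(p, "/"), "/")
	out := make([]string, 0, len(parts))
	for i := range parts {
		out = append(out, strings.Join(parts[i:], "/"))
	}
	return out
}
```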

I also overhauled the `indexer_test.go` file. It now asserts the order of the expected results (this is important, since matches based on the name of a file are more relevant than those based on its content).

I've added new test scenarios that deal with searching by filename. They

use a new repo included in the Gitea fixture.

The screenshot below depicts how Gitea shows the search results. It

shows results based on content in the same way as the current version

does. In matches based on the filename, the first seven lines of the

file contents are shown (BTW, this is how GitHub does it).

Resolves #32096

---------

Signed-off-by: Bruno Sofiato <bruno.sofiato@gmail.com>

While testing, I found that running the tests locally as documented in the changed README.md for pgsql doesn't work because of the MinIO dependency. I reworked this so that Docker is still the default, but it is also possible to run locally with only MinIO in Docker and the tests on bare metal.

Building on this, I also fixed the docs for running the pgsql tests.

Closes: #32168 (by changing documentation for pgsql tests)

Closes: #32169 (by changing documentation, Makefile & pgsql.ini.tmpl: adding `{{TEST_MINIO_ENDPOINT}}`)

Sorry for the combined PR, but when testing I ran into this issue and first thought the two were related; in the end they address the same problem: not being able to run the pgsql integration tests as described in the corresponding README.md.

Since page templates keep changing, some pages that used to contain forms with a CSRF token no longer have them.

This leads to some calls of `GetCSRF` returning an empty string, which makes the tests fail. For example:

3269b04d61/tests/integration/attachment_test.go (L62-L63)

The test did try to get the CSRF token and provided it, but it was empty.

Multiple chunks are uploaded with type "block" instead of "appendBlock", and for bigger uploads they may arrive out of order. The chunk size appears to be 8MB.

This change parses the blockList that is uploaded after all blocks to get the final artifact size and to order the blocks correctly before calculating the SHA-256 checksum over all of them.

Fixes #31354

This PR addresses the missing `bin` field in Composer metadata, which

currently causes vendor-provided binaries to not be symlinked to

`vendor/bin` during installation.

In the current implementation, running `composer install` does not

publish the binaries, leading to issues where expected binaries are not

available.

By properly declaring the `bin` field, this PR ensures that binaries are correctly symlinked upon installation, as described in the [Composer documentation](https://getcomposer.org/doc/articles/vendor-binaries.md).

Remove unused CSRF options, decouple the "new CSRF protector" and "prepare" logic, and do not redirect to the home page if CSRF validation fails (this shouldn't happen in daily usage; if it does, redirecting to the home page doesn't help and just makes the problem more complex for "fetch").

Fixes #31937

- Add missing comment reply handling

- Use `onGiteaRun` in the test because the fixtures are not present

otherwise (did this behaviour change?)

Compare without whitespaces.

A 500 status code was returned when passing a non-existent target to the create release API. This change handles this error and returns a 404 status code instead.

Discovered while working on #31840.

https://github.com/go-fed/httpsig seems to be unmaintained.

Switch to github.com/42wim/httpsig, which has removed deprecated crypto and uses SHA-256 signing by default for SSH RSA.

No impact for those who use Ed25519 SSH certificates.

This is a breaking change for:

- gitea.com/gitea/tea (go-sdk) - I'll be sending a PR there too

- activitypub using deprecated crypto (is this actually used?)

All refs under `refs/pull` should only be changed by Gitea itself, not by pushes from outside Gitea.

This PR prevents updates to pull refs but still allows other refs to be updated in the same push, e.g. with `--mirror`.

The main change is adding the checks to the `update` hook rather than `pre-receive`, because `update` is invoked once per ref, whereas a rejection in `pre-receive` rejects every ref update in the push.
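Git runs the `update` hook once per ref with the ref name, old object name and new object name, and a non-zero exit rejects only that ref. A minimal sketch of the per-ref check, where `checkRefUpdate` is a hypothetical helper rather than Gitea's hook code:

```go
package main

import (
	"fmt"
	"strings"
)

// checkRefUpdate rejects pushes to refs/pull/* while leaving every
// other ref in the same push free to update.
func checkRefUpdate(refName string) error {
	if strings.HasPrefix(refName, "refs/pull/") {
		return fmt.Errorf("%s: pull request refs can only be updated by Gitea", refName)
	}
	return nil
}
```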

We had an issue where a repo was using LFS to store a file, but the user did not push the file. When trying to view the file, Gitea returned a 500 HTTP status code referencing `ErrLFSObjectNotExist`. It appears the intent was to render this file as plain text, but the conditional was flipped. I've also added a test to verify that the file is rendered as plain text.

Support compression for Actions logs to save storage space and

bandwidth. Inspired by

https://github.com/go-gitea/gitea/issues/24256#issuecomment-1521153015

The biggest challenge is that the compression format should support

[seekable](https://github.com/facebook/zstd/blob/dev/contrib/seekable_format/zstd_seekable_compression_format.md).

So when users are viewing a part of the log lines, Gitea doesn't need to

download the whole compressed file and decompress it.

That means gzip cannot help here. I did some research: there aren't many choices (e.g. bgzip and xz), but I think zstd is the most popular one. It has Golang implementations in [zstd](https://github.com/klauspost/compress/tree/master/zstd) and [zstd-seekable-format-go](https://github.com/SaveTheRbtz/zstd-seekable-format-go), and better still, it has good compatibility: a seekable-format zstd file can be read by a regular zstd reader.

This PR introduces a new package `zstd` to combine and wrap the two

packages, to provide a unified and easy-to-use API.

A new setting, `LOG_COMPRESSION`, is also added to the config. Although I don't see any reason not to use compression, I think it's a good idea to keep the default as `none` to be consistent with old versions.

`LOG_COMPRESSION` takes effect only for new log files. It adds `.zst` as an extension to the file name, so when reading, Gitea can determine from the file name whether decompression is needed. Old files keep their format, since it's not worth converting them, as they will be cleared after #31735.
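The file-name convention reduces the read path to a suffix check. A minimal sketch, assuming `zstd` is the `LOG_COMPRESSION` value that enables compression; `logFileName` and `needsDecompression` are hypothetical helpers.

```go
package main

import "strings"

// logFileName appends ".zst" for newly written logs when compression is
// enabled; existing files keep their original names.
func logFileName(base, compression string) string {
	if compression == "zstd" {
		return base + ".zst"
	}
	return base
}

// needsDecompression decides from the name alone, so old uncompressed
// logs and new compressed ones can coexist.
func needsDecompression(name string) bool {
	return strings.HasSuffix(name, ".zst")
}
```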

<img width="541" alt="image" src="https://github.com/user-attachments/assets/e9598764-a4e0-4b68-8c2b-f769265183c9">

Close #27031

If the RPM package does not contain a matching GPG signature, the installation will fail (see #27031). RPM uploads are now signed automatically.

This option is turned off by default for compatibility.

Fix #31707.

Also related to #31715.

Some Actions resources can have different types of ownership. It can be:

- global: all repos and orgs/users can use it.

- org/user level: only the org/user can use it.

- repo level: only the repo can use it.

There are two ways to distinguish org/user level from repo level:

1. `{owner_id: 1, repo_id: 2}` for repo level, and `{owner_id: 1, repo_id: 0}` for org level.
2. `{owner_id: 0, repo_id: 2}` for repo level, and `{owner_id: 1, repo_id: 0}` for org level.

The first way seems more reasonable, but it may not be right. The point is that although a resource such as a runner belongs to a repo (it can be used by the repo), the runner doesn't belong to the repo's org (other repos in the same org cannot use the runner). So the second method makes more sense.

Also, the first way is not user-friendly to query: we must set the repo ID to zero to avoid wrong results.

So #31715 should be right, and the simplest way to fix #31707 is just:

```diff
- shared.GetRegistrationToken(ctx, ctx.Repo.Repository.OwnerID, ctx.Repo.Repository.ID)
+ shared.GetRegistrationToken(ctx, 0, ctx.Repo.Repository.ID)
```

However, it is quite intuitive to set both the owner ID and the repo ID, since the repo belongs to the owner, so I prefer to stay compatible with that. If both the owner ID and the repo ID are non-zero when creating or finding, it's very clear that the caller wants a repo-level resource but set the owner ID accidentally. So it's OK to accept it but reset the owner ID to zero.
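The compatibility rule amounts to a small normalization step applied before creating or finding a resource. A minimal sketch with a hypothetical `normalizeOwnership` helper:

```go
package main

// normalizeOwnership applies the second scheme: a non-zero repo ID means
// repo level, so an owner ID passed alongside it is treated as accidental
// and reset to zero. Org/user-level (repo ID 0) and global (both 0)
// values pass through unchanged.
func normalizeOwnership(ownerID, repoID int64) (int64, int64) {
	if repoID != 0 {
		return 0, repoID
	}
	return ownerID, repoID
}
```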

See discussion on #31561 for some background.

The introspect endpoint was using the OIDC token itself for

authentication. This fixes it to use basic authentication with the

client ID and secret instead:

* Applications with a valid client ID and secret should be able to

successfully introspect an invalid token, receiving a 200 response

with JSON data that indicates the token is invalid

* Requests with an invalid client ID and secret should not be able

to introspect, even if the token itself is valid

Unlike #31561 (which just future-proofed the current behavior against

future changes to `DISABLE_QUERY_AUTH_TOKEN`), this is a potential

compatibility break (some introspection requests without valid client

IDs that would previously succeed will now fail). Affected deployments

must begin sending a valid HTTP basic authentication header with their

introspection requests, with the username set to a valid client ID and

the password set to the corresponding client secret.

This leverages the existing `sync_external_users` cron job to synchronize the `IsActive` flag on users who use an OAuth2 provider set to synchronize. This synchronization is done by checking for expired access tokens and using the stored refresh token to request a new access token. If the OAuth2 provider responds with the `invalid_grant` error code, the user is marked as inactive. However, the user can reactivate their account by logging in via the web browser through their OAuth2 flow.

Also, to support this, a linked `ExternalLoginUser` is now always created upon login or signup via OAuth2.

### Notes on updating permissions

Ideally, we would also refresh permissions from the configured OAuth provider (e.g., admin, restricted and group mappings) to match the LDAP implementation. However, the OAuth library used for this, `goth`, doesn't seem to support issuing a session via refresh tokens. The interface provides a [`RefreshToken` method](https://github.com/markbates/goth/blob/master/provider.go#L20), but the returned `oauth2.Token` doesn't implement the `goth.Session` we would need to call `FetchUser`. Because of provider-specific implementations, we would need to build a compatibility function for every provider, since they cast to concrete types (e.g. [Azure](https://github.com/markbates/goth/blob/master/providers/azureadv2/azureadv2.go#L132)).

---------

Co-authored-by: Kyle D <kdumontnu@gmail.com>

We have some instances that only allow using an external authentication source for authentication. In this case, users changing their email, password, or linked OpenID connections has no effect, and we'd like to hide these options to prevent confusion.

Included in this are several changes to support this:

* A new setting to disable user managed authentication credentials

(email, password & OpenID connections)

* A new setting to disable user managed MFA (2FA codes & WebAuthn)

* Fix an issue where some templates had separate logic for determining whether a feature was disabled, which didn't check the globally disabled features

* Hide more user setting pages in the navbar when their settings aren't

enabled

---------

Co-authored-by: Kyle D <kdumontnu@gmail.com>

Fixes #22722

### Problem

Currently, it is not possible to force push to a branch with branch protection rules in place. There are often times when this is necessary (CI workflows, administrative tasks, etc.).

The current workaround is to rename/remove the branch protection,

perform the force push, and then reinstate the protections.

### Solution

Provide an additional section in the branch protection rules to allow

users to specify which users with push access can also force push to the

branch. The default value of the rule will be set to `Disabled`, and the

UI is intuitive and very similar to the `Push` section.

It is worth noting in this implementation that allowing force push does

not override regular push access, and both will need to be enabled for a

user to force push.

This applies to manual force pushes to a remote, and also to updating a PR by rebase in the Gitea UI (which requires a force push).

This modifies the `BranchProtection` API structs to add:

- `enable_force_push bool`

- `enable_force_push_whitelist bool`

- `force_push_whitelist_usernames string[]`

- `force_push_whitelist_teams string[]`

- `force_push_whitelist_deploy_keys bool`
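The resulting permission rule can be sketched as follows. `branchProtection` mirrors only a subset of the API fields listed above (usernames, not teams or deploy keys), and `canForcePush` is a hypothetical helper that assumes regular push permission has already been evaluated.

```go
package main

// branchProtection is a simplified subset of the protection settings.
type branchProtection struct {
	EnableForcePush             bool
	EnableForcePushWhitelist    bool
	ForcePushWhitelistUsernames []string
}

// canForcePush requires regular push access as well as force-push access:
// allowing force push never overrides push permission.
func canForcePush(p branchProtection, user string, canPush bool) bool {
	if !canPush || !p.EnableForcePush {
		return false
	}
	if !p.EnableForcePushWhitelist {
		return true // force push enabled for everyone who can push
	}
	for _, u := range p.ForcePushWhitelistUsernames {
		if u == user {
			return true
		}
	}
	return false
}
```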

### Updated Branch Protection UI:

<img width="943" alt="image" src="https://github.com/go-gitea/gitea/assets/79623665/7491899c-d816-45d5-be84-8512abd156bf">

### Pull Request `Update branch by Rebase` option enabled with source branch `test` being a protected branch:

<img width="1038" alt="image" src="https://github.com/go-gitea/gitea/assets/79623665/57ead13e-9006-459f-b83c-7079e6f4c654">

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

1. Add some general guidelines on how to write our TypeScript code

2. Add `@typescript-eslint/eslint-plugin`, general typescript rules

3. Add `eslint-plugin-deprecation` to detect deprecated code

4. Fix all new lint issues that came up

This enables ESLint to use the TypeScript parser and resolver. This brings the benefit that ESLint rules now have type information available, and the tsconfig.json it requires is also needed for the upcoming TypeScript migration. Notable changes:

- Add typescript parser and resolver

- Move the vue-specific config into the root file

- Enable the `vue-scoped-css/enforce-style-type` rule; there was only one violation, and I added an inline disable there.

- Fix new lint errors that were detected because of the parser change

- Update `i/no-unresolved` to remove now-unnecessary workaround for the

resolver

- Disable `i/no-named-as-default` as it seems to raise bogus issues in

the webpack config

- Change vitest config to typescript

- Change playwright config to typescript

- Add `eslint-plugin-playwright` and fix issues

- Add `tsc` linting to `make lint-js`

This PR only does "renaming":

* `Route` should be `Router` (and chi router is also called "router")

* `Params` should be `PathParam` (to distinguish it from URL query params, and to match `FormString`)

* Use lower case for private functions to avoid exposing or abusing

Uses `gopls check <files>` as a linter. Tested locally, it currently reports 149 errors for me. I don't think I want to fix them in this PR, but I would like to at least get this analysis running on CI.

List of errors:

```
modules/indexer/code/indexer.go:181:11: impossible condition: nil != nil
routers/private/hook_post_receive.go:120:15: tautological condition: nil == nil
services/auth/source/oauth2/providers.go:185:9: tautological condition: nil == nil
services/convert/issue.go:216:11: tautological condition: non-nil != nil
tests/integration/git_test.go:332:9: impossible condition: nil != nil
services/migrations/migrate.go:179:24-43: unused parameter: ctx
services/repository/transfer.go:288:48-69: unused parameter: doer
tests/integration/api_repo_tags_test.go:75:41-61: unused parameter: session
tests/integration/git_test.go:696:64-74: unused parameter: baseBranch
tests/integration/gpg_git_test.go:265:27-39: unused parameter: t
tests/integration/gpg_git_test.go:284:23-29: unused parameter: tmpDir
tests/integration/gpg_git_test.go:284:31-35: unused parameter: name
tests/integration/gpg_git_test.go:284:37-42: unused parameter: email
```

Fixes an issue when running `choco info pkgname` where `pkgname` is also a substring of another package ID.

Relates to #31168

---

This might fix the linked issue, but if that's OK, I'd like to test it with more choco commands before closing the issue, in case I find other problems.
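The fix amounts to matching package IDs exactly instead of by substring. A minimal sketch, assuming IDs compare case-insensitively as NuGet package IDs do; `packageByID` is a hypothetical helper, not Gitea's query code.

```go
package main

import "strings"

// packageByID returns only IDs equal to the query (ignoring case), so a
// query for "git" no longer also matches "gitkraken".
func packageByID(ids []string, query string) []string {
	var out []string
	for _, id := range ids {
		if strings.EqualFold(id, query) {
			out = append(out, id)
		}
	}
	return out
}
```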

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

This PR implements object storage (LFS, packages, attachments, etc.) for Azure Blob Storage. It depends on the official Azure Go SDK and supports both the Azure Blob Storage cloud service and the Azurite mock server.

Replace #25458. Fix #22527

- [x] CI Tests
- [x] integration test; MSSQL integration tests are now based on azureblob

- [x] unit test

- [x] CLI Migrate Storage

- [x] Documentation for configuration added

------

TODO (other PRs):

- [ ] Improve performance of `blob download`.

---------

Co-authored-by: yp05327 <576951401@qq.com>